Decoding Artificial Intelligence (AI): Your Simple Cheat Sheet

Artificial intelligence (AI) is the buzzword of the decade. Every tech company is touting its advancements in AI, but with all the jargon, it can be hard to grasp what’s really happening. To help you navigate this complex field, we’ve compiled a cheat sheet covering some of the most common Artificial Intelligence terms. By the end of this article, you’ll have a clearer understanding of what these terms mean and why they’re important.

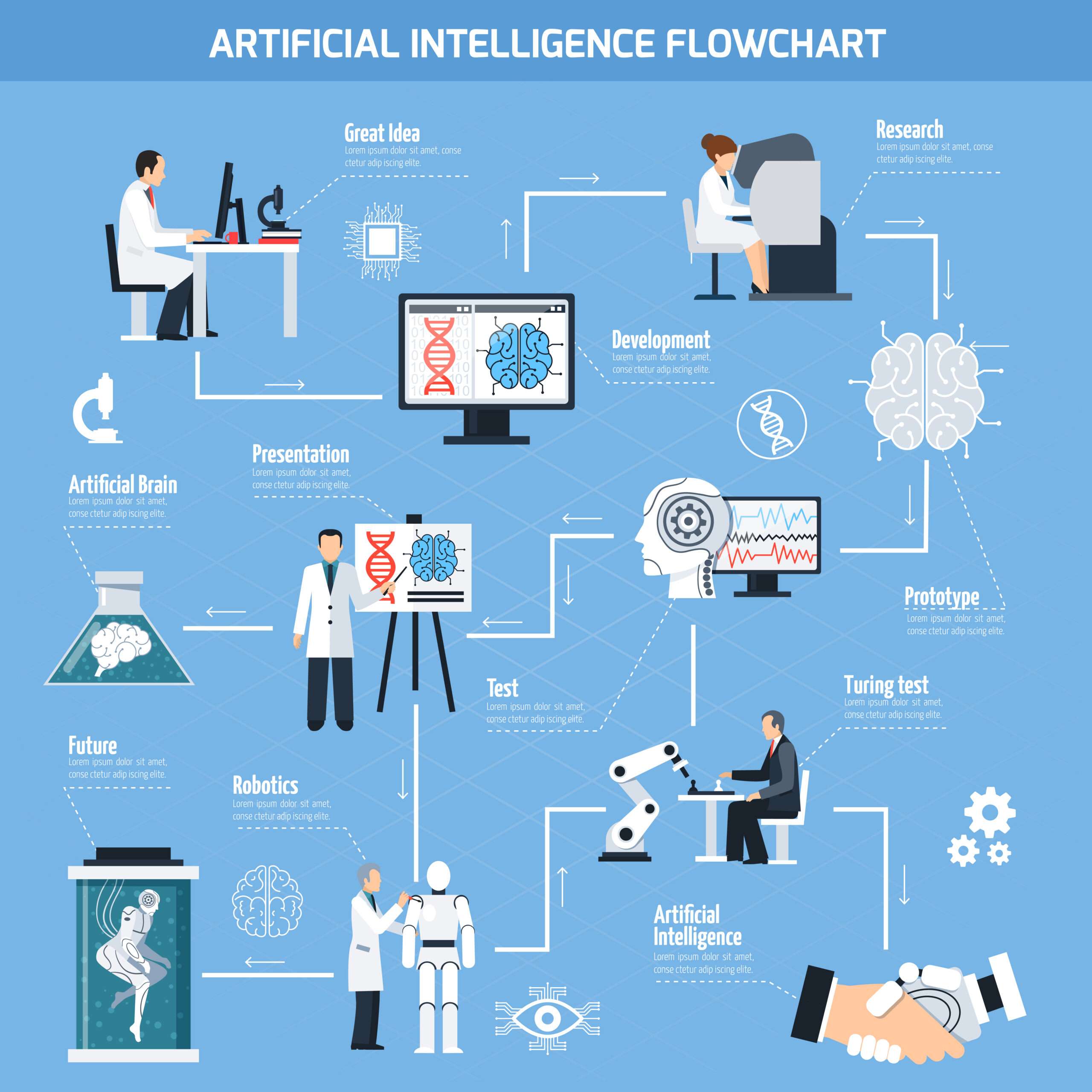

What Exactly is Artificial Intelligence?

Artificial Intelligence: AI refers to the field of computer science focused on creating systems that can perform tasks that typically require human intelligence. While AI is often discussed as a technology or even as a sort of entity, its true definition can be slippery, especially when it’s used as a marketing buzzword. Companies like Google, Meta, and OpenAI often use AI to refer to everything from underlying models to specific products, which only adds to the confusion.

Key Artificial Intelligence Terms You Should Know

Machine Learning (ML): This is a subset of Artificial Intelligence where systems are trained on data to make predictions or decisions without being explicitly programmed. Think of it as teaching a computer to learn from experience.
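
To make "learning from experience" concrete, here is a minimal sketch of one very simple learning method, a nearest-neighbor classifier. The fruit weights and labels are invented for illustration, and `predict` is a hypothetical helper name. There is no hand-written rule about weight anywhere; the answer comes entirely from the examples.

```python
# Toy "learning from data": a 1-nearest-neighbor classifier.
# The examples below are invented purely for illustration.

def predict(examples, weight_in_grams):
    """Return the label of the training example closest to the input."""
    nearest = min(examples, key=lambda pair: abs(pair[0] - weight_in_grams))
    return nearest[1]

# (weight in grams, label): hypothetical training data
training_examples = [(5, "grape"), (7, "grape"), (150, "apple"), (180, "apple")]

print(predict(training_examples, 6))    # closest examples are grapes
print(predict(training_examples, 160))  # closest example is an apple
```

Changing the training examples changes the predictions without touching the code, which is the core idea behind machine learning.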

Artificial General Intelligence (AGI): This refers to hypothetical AI that matches or surpasses human intelligence across a wide range of tasks, rather than in one narrow area. AGI is a hot topic because of its potential to revolutionize industries, and also because of the ethical concerns it raises, like the fear of AI becoming too powerful.

Generative AI: This type of Artificial Intelligence can create new content, such as text, images, or code. If you’ve ever used ChatGPT or Google’s Gemini, you’ve seen generative AI in action. These tools generate responses based on vast amounts of data they’ve been trained on.

Hallucinations: In the context of AI, a hallucination is output that is inaccurate or nonsensical but presented as if it were fact. Because these tools generate responses from patterns in their training data rather than by checking facts, they sometimes produce confident but incorrect answers.

Bias: AI systems can reflect the biases present in their training data. For instance, research has shown that some facial analysis tools misidentify the gender of darker-skinned individuals, particularly women, more often than that of lighter-skinned individuals, a gap attributed to unrepresentative datasets.

Understanding AI Models

AI Models: These are the algorithms trained on data to perform specific tasks. For example, a model might be trained to recognize images, translate languages, or generate text.

Large Language Models (LLMs): A specific type of AI model designed to understand and generate human language. GPT-4 and Claude are examples of LLMs that can hold conversations and produce text.

Diffusion Models: Used in generating images from text, these models learn by first adding noise to images and then reversing the process to produce clear outputs. This technique is also applied to audio and video.
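
The "adding noise" half of that process can be sketched in a few lines. This is a simplified illustration, not any particular model's implementation: the four-pixel "image", the noise level of 0.2, and the helper name `add_noise` are all assumptions. The reverse, denoising half requires a trained neural network and is not shown.

```python
import random

def add_noise(pixels, noise_level, rng):
    """Blend each pixel with Gaussian noise; noise_level is between 0 and 1."""
    keep = (1.0 - noise_level) ** 0.5  # how much of the original signal survives
    mix = noise_level ** 0.5           # how much random noise is blended in
    return [keep * p + mix * rng.gauss(0.0, 1.0) for p in pixels]

rng = random.Random(0)         # fixed seed so runs are repeatable
image = [0.0, 0.5, 1.0, 0.5]   # a made-up 4-pixel "image"

noisy = image
for step in range(10):
    # Each step destroys a little more of the original picture
    noisy = add_noise(noisy, 0.2, rng)

print(noisy)
```

After enough steps the values are essentially random. During training, a diffusion model learns to undo one such step at a time, which is what lets it later start from pure noise and work toward a clear image.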

Foundation Models: These are large, general-purpose models trained on vast datasets. They can be adapted to many different tasks with little or no task-specific training, for example through fine-tuning or prompting. OpenAI’s GPT and Meta’s Llama are examples.

Frontier Models: A marketing term for the next generation of AI models, which are expected to be more powerful than current ones.

How Do AI Models Learn?

AI models learn through a process called training, where they analyze datasets to recognize patterns and make predictions. This process requires significant computing power, often provided by GPUs like Nvidia’s H100 chip.

Parameters are the internal variables that an AI model adjusts during training to improve its performance. The number of parameters in a model is often used as a rough measure of its size and complexity.
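
As a rough illustration of what adjusting a parameter during training looks like, the sketch below fits a model with a single parameter `w` using gradient descent, one common training method. The data points, learning rate, and step count are made up for the example; real models repeat the same idea across millions or billions of parameters.

```python
# Toy training loop: fit one parameter w so that prediction = w * x.
# The data is invented and follows y = 2 * x exactly.

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

def train(data, steps=200, learning_rate=0.05):
    w = 0.0  # the model's single parameter, starting from an arbitrary guess
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w
        gradient = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        # Nudge the parameter in the direction that reduces the error
        w -= learning_rate * gradient
    return w

w = train(data)
print(w)  # should end up very close to 2
```

Each pass through the loop measures how wrong the current guess is and adjusts `w` slightly. The pattern in the data (y is twice x) is never written into the code; it is recovered from the examples.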

Other Key Terms

Natural Language Processing (NLP): This is the ability of AI to understand and generate human language. ChatGPT is an example of an NLP tool.

Inference: The process by which an AI model generates outputs, like when ChatGPT writes a response to your question.

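Tokens: These are the pieces, such as words, parts of words, or punctuation marks, that AI models break text into in order to analyze and generate language. The more tokens a model can handle at once (its context window), the more text it can take into account when producing a response.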
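
A simplified sketch of tokenization is shown below. It splits text into words and punctuation marks, which is an assumption made to keep the example short: production models typically use learned subword vocabularies, so their tokens often do not line up with whole words. The function name `toy_tokenize` is hypothetical.

```python
import re

def toy_tokenize(text):
    """Split text into words and punctuation marks (a deliberate simplification)."""
    return re.findall(r"\w+|[^\w\s]", text)

tokens = toy_tokenize("Tokens are pieces of text.")
print(tokens)       # ['Tokens', 'are', 'pieces', 'of', 'text', '.']
print(len(tokens))  # 6
```

Counting tokens this way gives a feel for why limits and pricing for AI tools are usually quoted in tokens rather than in words or characters.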

Neural Network: A computing system loosely modeled after the human brain’s network of neurons. Neural networks are fundamental to modern AI, enabling systems to learn complex patterns.
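
The sketch below shows the basic building block in miniature: each artificial "neuron" multiplies its inputs by weights, adds them up, and squashes the result into a value between 0 and 1. The weights here are arbitrary hand-picked numbers and the function names are hypothetical; in a real network the weights are the parameters learned during training.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def tiny_network(inputs):
    # Hidden layer: two neurons with arbitrary, hand-picked weights
    h1 = neuron(inputs, [0.5, -0.4], 0.1)
    h2 = neuron(inputs, [-0.3, 0.8], 0.0)
    # Output layer: one neuron that combines the hidden layer's outputs
    return neuron([h1, h2], [1.2, -0.7], 0.05)

print(tiny_network([1.0, 2.0]))
```

Stacking many such layers, each feeding the next, is what lets larger networks capture complex patterns.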

Transformer: A type of neural network architecture that has revolutionized AI by enabling more efficient training and better performance, particularly in language tasks.

RAG (Retrieval-Augmented Generation): This technique enhances AI accuracy by allowing models to pull in external data during inference, reducing the likelihood of hallucinations.
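
The retrieval step can be sketched as follows. This is a deliberately naive illustration: it picks the document that shares the most words with the question, whereas real systems generally use more sophisticated search, and the final call to a language model is left out. The function names and the two sample documents are assumptions for the example.

```python
def retrieve(question, documents):
    """Pick the document that shares the most words with the question."""
    question_words = set(question.lower().split())
    return max(
        documents,
        key=lambda doc: len(question_words & set(doc.lower().split())),
    )

def build_prompt(question, documents):
    """Put the retrieved text in front of the question before asking the model."""
    context = retrieve(question, documents)
    return f"Context: {context}\nQuestion: {question}\nAnswer using only the context."

documents = [
    "The H100 is a GPU made by Nvidia.",
    "Llama is a family of language models from Meta.",
]

prompt = build_prompt("Who makes the H100 GPU?", documents)
print(prompt)
```

Because the model is handed the relevant text along with the question, it has less need to rely on memory alone, which is where hallucinations tend to creep in.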

The Hardware Behind AI

AI models run on powerful hardware, with GPUs like Nvidia’s H100 being among the most sought-after. Neural Processing Units (NPUs), dedicated AI chips found in many phones and PCs, are also part of the picture, and their performance is often quoted in TOPS (trillion operations per second).
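
To give a sense of scale for TOPS, the arithmetic below estimates how long a chip would take to finish a workload if it ran at its full rated speed. The 40 TOPS rating and the 80 trillion operation workload are hypothetical numbers, and the helper name is made up for the example.

```python
def seconds_for_workload(total_operations, tops):
    """Ideal time to run a workload on a chip rated at `tops` trillion ops/sec."""
    operations_per_second = tops * 1e12
    return total_operations / operations_per_second

# Hypothetical numbers: 80 trillion operations on a chip rated at 40 TOPS
print(seconds_for_workload(80e12, 40))  # 2.0
```

This is a best-case figure. Real workloads rarely reach a chip's peak rating, so TOPS is better read as an upper bound than as a promise.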

Leading Players in the AI World

Some key companies driving AI innovation include:

- OpenAI / ChatGPT: A pioneer in AI, known for its conversational AI chatbot, ChatGPT.

- Microsoft / Copilot: Microsoft integrates AI across its products, often in partnership with OpenAI.

- Google / Gemini: Google’s AI efforts are centered around Gemini, which powers various tools and services.

- Meta / Llama: Meta’s open-source LLM, Llama, is central to its AI strategy.

- Apple / Apple Intelligence: Apple is gradually incorporating AI features into its ecosystem.

- Anthropic / Claude: A rising AI company focused on creating safer, more reliable AI systems.

- xAI / Grok: Elon Musk’s latest venture into AI, developing the Grok language model.

- Perplexity: Known for its AI-powered search engine, which has sparked debate over data use.

- Hugging Face: A platform for sharing AI models and datasets, crucial for collaboration in the AI community.

Conclusion

Artificial intelligence is a rapidly evolving field filled with complex terms and concepts. But with this cheat sheet, you should now have a better grasp of the basics. Whether you’re a tech enthusiast, a professional in the field, or just curious, understanding these terms will help you keep up with the fast-paced world of AI.